HARP v2.0 is here!

You can download it here: HARP_v2.0.tar.gz

(For compile and run instructions, please see Tutorial HARP v2.0.)

HARP v2.0 comes with significant code extensions in numerous places. So what's the difference from HARP v1.0?

Every video encoder performs Rate-Distortion Optimization, RDO for short, which tries to find an optimal balance between rate (the disk space required for the video) and distortion (the loss in video quality relative to the original).
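To make the trade-off concrete, here is a minimal sketch of the usual Lagrangian formulation, where each candidate gets a cost J = D + λ·R and the cheapest one wins. The candidate names, the numbers and the helper functions are made up for illustration and are not taken from HARP or HM.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str          # hypothetical mode name, for illustration only
    distortion: float  # e.g. sum of squared differences to the original block
    rate_bits: float   # bits needed to signal this candidate


def rd_cost(candidate, lam):
    """Lagrangian rate-distortion cost J = D + lambda * R."""
    return candidate.distortion + lam * candidate.rate_bits


def best_candidate(candidates, lam):
    """Return the candidate with the lowest rate-distortion cost."""
    return min(candidates, key=lambda c: rd_cost(c, lam))


# Made-up numbers: three ways of coding the same block.
candidates = [
    Candidate("intra_dc", distortion=1200.0, rate_bits=40.0),
    Candidate("inter_2Nx2N", distortion=900.0, rate_bits=95.0),
    Candidate("skip", distortion=1500.0, rate_bits=2.0),
]

for lam in (1.0, 10.0):
    winner = best_candidate(candidates, lam)
    print(f"lambda={lam}: {winner.name} (J={rd_cost(winner, lam)})")
```

A small λ favors the candidate with low distortion, while a large λ makes bits expensive and pushes the decision towards cheap modes such as skip.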

With the advent of HEVC/H.265, it has become evident that considerable CPU time is consumed by modern RDO, simply because ever larger sets of new intra/inter modes, partition sizes and filter options need to be checked during encoding. New resolutions like 4K or even 8K further increase the computational complexity. A hardware encoder in any embedded device such as a smartphone or tablet needs to perform this RDO in order to produce HEVC/H.265 encoded bitstreams.

As a result, System-on-Chip (SoC) vendors are optimizing the RDO parts of their HEVC IP cores to still guarantee real-time encoding at HD and 4K resolution. However, a considerable part of the speedup achieved by optimizing the RDO simply comes from "skipping checks". Imagine a complex tree of thousands of possible RDO checks, of which whole branches are simply cut off because indicators like Hadamard costs or spatial/temporal neighborhoods suggest that these checks will not yield beneficial results. This skipping leads to significant speedups, but typically comes at the cost of rate-distortion performance (i.e. a larger bitstream or degraded video quality). More sophisticated skipping strategies based on statistical measures and machine learning may offer high speedups while still maintaining near-optimal RDO decisions. However, gathering the required statistical RDO information in the HEVC reference implementation HM is difficult, simply because of its extensive use of recursion, its tree-like data structures and the difficulty of serializing/deserializing custom data structures and buffers in C++.
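The idea of cutting off whole branches can be sketched as follows. This is a toy model, not how HM or any hardware encoder actually prunes: the tree, the costs, the `skip_factor` threshold and comparing a cheap estimate directly against a full cost are all simplifying assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Check:
    name: str
    cheap_cost: float  # cheap estimate, e.g. a Hadamard-based cost
    full_cost: float   # stand-in for the expensive full RD cost
    children: list = field(default_factory=list)


def count(node):
    """Number of checks in the subtree rooted at node."""
    return 1 + sum(count(child) for child in node.children)


def search(node, best, stats, skip_factor=1.5):
    """Depth-first RDO search; best is a (cost, name) tuple or None."""
    # Prune the whole branch if the cheap estimate already looks hopeless.
    if best is not None and node.cheap_cost > skip_factor * best[0]:
        stats["skipped"] += count(node)
        return best
    stats["evaluated"] += 1
    if best is None or node.full_cost < best[0]:
        best = (node.full_cost, node.name)
    for child in node.children:
        best = search(child, best, stats, skip_factor)
    return best


# Made-up tree of checks.
tree = Check("2Nx2N", 100.0, 110.0, [
    Check("2NxN", 120.0, 105.0, [Check("2NxN_sub", 130.0, 108.0)]),
    Check("NxN", 200.0, 190.0, [
        Check("NxN_a", 210.0, 205.0),
        Check("NxN_b", 220.0, 215.0),
    ]),
])

stats = {"evaluated": 0, "skipped": 0}
best = search(tree, None, stats)
print(best, stats)
```

Here half of the checks are never evaluated. Whenever a pruned branch would have contained the true optimum, that saving is paid for with a worse rate-distortion result, which is exactly the loss described above.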

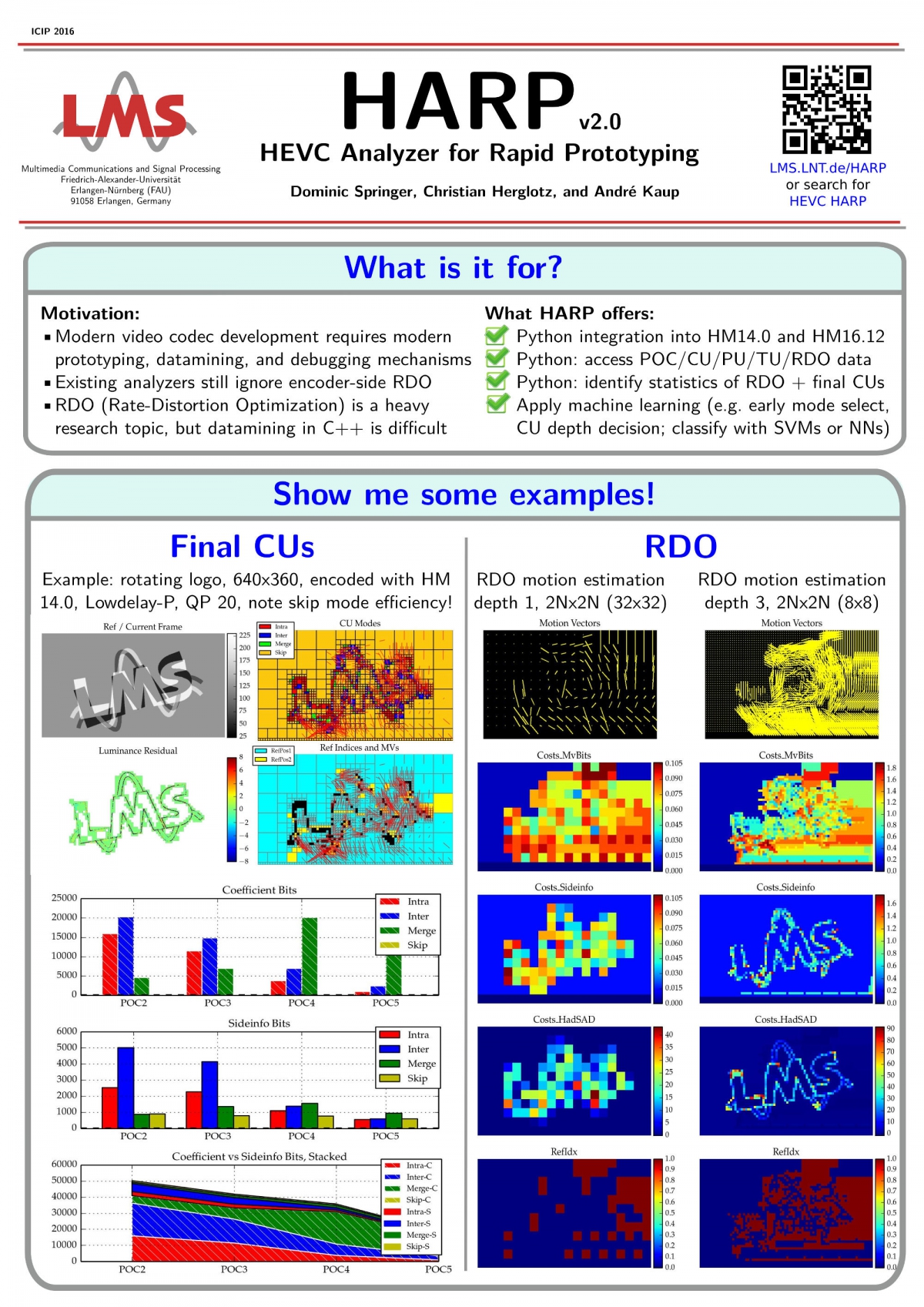

This is where HARP v2.0 comes into play. It will log each individual RDO decision checked throughout the RDO process, at a detail level you specify. The potential is manifold:

- Investigate your new encoding tools in the scope of the RDO, and find further optimization potential ("Why did other RDO checks win, exactly?")

- Test sophisticated, multi-objective cost functions and cost weighting

- Apply machine learning to speed up RDO! The basic idea is to move beyond simple thresholding and statistics, and apply all those interesting new ML approaches and insights. Note that numerous authors have shown that this can in fact be done at high speed, using SVMs or neural networks. Good starting points are http://link.springer.com/article/10.1186/1687-5281-2013-4 (Open Access!) and http://ieeexplore.ieee.org/document/7070704/.

- Or, hey, simply analyze the RDO tree, out of curiosity :)

All RDO data can be accessed "online" (during encoding) or "offline" (after the bitstream is created), from within C++ or Python. We strongly encourage you to use Python for your first HARP steps as well as your first HEVC experiments, since Python offers the simplicity and intuitiveness of an interpreted language. With slight changes to the code, you can run whatever Python function you can think of, right inside the RDO during encoding. Numerous examples are provided with the download package to get you started.
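To give a flavor of what an "offline" analysis could look like, here is a small sketch in plain Python. The record layout (keys `cu`, `depth`, `mode`, `cost`) is purely hypothetical and is not the format HARP writes; please refer to the examples in the download package for the real data structures.

```python
from collections import Counter

# Hypothetical log: one record per RDO check (not HARP's actual format).
records = [
    {"cu": 0, "depth": 0, "mode": "skip", "cost": 1520.0},
    {"cu": 0, "depth": 0, "mode": "inter_2Nx2N", "cost": 1850.0},
    {"cu": 1, "depth": 1, "mode": "intra_dc", "cost": 700.0},
    {"cu": 1, "depth": 1, "mode": "inter_2Nx2N", "cost": 640.0},
    {"cu": 2, "depth": 1, "mode": "skip", "cost": 300.0},
    {"cu": 2, "depth": 1, "mode": "intra_dc", "cost": 410.0},
]


def winners(records):
    """Map each coding unit id to its lowest-cost record."""
    best = {}
    for rec in records:
        cu = rec["cu"]
        if cu not in best or rec["cost"] < best[cu]["cost"]:
            best[cu] = rec
    return best


# How often does each mode win, and how many checks lost?
win_counts = Counter(rec["mode"] for rec in winners(records).values())
lost_checks = len(records) - len(winners(records))

print(win_counts)
print("checks that did not win:", lost_checks)
```

Statistics like these are a natural starting point for deciding which checks a skipping strategy could safely drop.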

For all of you who are currently at ICIP2016 in Phoenix, AZ (25-28. Sept. 2016): we will present HARP in the scope of a Visual Showcase presentation, from 10:30 to 12:00 in Room 301 CD, on Wednesday 28th September. We are looking forward to your visit!

Dominic and Christian

dominic[DOT]h[DOT]springer[AT]gmail.com

christian.herglotz@fau.de